Demystifying Big Query in Google Cloud

Big Query:

- Big Query is a really important part of the GCP Big Data suites.

- Big Query is a Cloud enterprise data warehouse.

- Petabyte scale

- Serverless

- Highly-scalable

- It has an in-memory BI engine, creatively called Big Query BI Engine

- This allows you to create very fast interactive reports, and dashboards, and then you can view these in data studio.

- It also has machine-learning capabilities

- Allowing you to build machine-learning models using SQL.

- There is also support for geospatial, data storage and processing.

Key features of Big Query:

- It is highly available.

- It supports Standard SQL.

- You can put your SQL skills to work directly.

- Big Query also handles Federated data, which is data that is stored outside of Big Query.

- There are automatic backups.

- There is support for governance and security, this is critical in today's world when there is such a focus on data security and personal privacy.

- There is also the separation of storage and computing.

- This leads to a number of really positive things, such as acid compliance, storage operations, cost-effective scalable storage, and stateless resilient computing.

Interact with Big Query?

- You can use the web console, which is available in your web browser.

- You can use the command line tool, specifically the BQ command line tool. When you install and initialize the Cloud SDK, you will have this tool included.

- BQ is a Python-based command line tool for Big Query.

- There are also a number of client libraries for programmatic access to Big Query, and there are libraries for C#, Go, Java, Node.JS, PHP, Python, and Ruby.

Big Query Data Sets

- All Big Query data assets ultimately reside in a project.

- Datasets are containers for tables and views.

- You're able to create many datasets within a project.

- You can think of a dataset as approximating a database.

- You create each native table, external table, or view within a specific dataset.

- Native tables are standard Big Query tables where the data is held within Big Query storage.

- External tables are tables that are backed by storage outside of Big Query.

- Table schemas are defined within Big Query, but the actual data resides outside views or virtual tables that are created by an SQL Query.

Ingest data into Big Query:

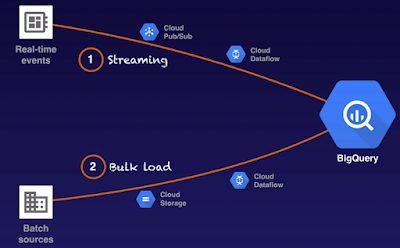

- There are 2 types of data sources for Big Query:

- Real-time data, and bulk data sources, such as files.

- Real-time events are streamed into Big Query, generally.

- A common pattern is to push the events to Cloud Pub/Sub, and then use a Cloud Dataflow job to process them and push them into Big Query.

- Batch sources of bulk loaded into Big Query.

- A common pattern is to push the files to Cloud Storage, and then have a Cloud Dataflow job, pick that data up, process it, and push it into Big Query.

Analyze and visualize the data using BI tools.

- These can be external tools like

- Tableau

- Looker

- Google Data Studio etc.

- Cloud Datalab

- You can interactively explore the data using Cloud Datalab.

- Google Sheets or Google Cloud Storage

- You can export to Google Sheets or Google Cloud Storage.

- Tools like Dataflow and Dataproc

- You can access the data from other GCP big data tools like Dataflow and Dataproc

- You can share the data with colleagues.

It's worth mentioning again how important Big Query is, Big Query occurs in many of the GCP reference architectures.

Jobs in Big Query

- A job is an action that Big Query runs on your behalf.

- Jobs are run asynchronously as they can take a long time to complete.

- Jobs can be polled for status to determine if they've started if they're in progress, or if they have completed.

- Not all actions in Big Query are executed as jobs

- Short-running actions are not assigned a job resource.

Four types of jobs.

- Load jobs.

- These load data into BigQuery.

- Export jobs.

- These export data from BigQuery,

- Query jobs:

- These allow you to query data within BigQuery.

- Copy jobs.

- These jobs are used to copy tables or data sets.

They are 2 priorities for jobs.

- There is the interactive priority and the batch priority.

- The interactive priority is the default.

- When running interactively, the query is executed as soon as possible, interactive queries do count toward your concurrent rate limit.

- That is the number of concurrent queries running at any time and towards your daily limit.

- That is the total number of queries that you can run per day.

- Query results for interactive queries are always saved to a table, either as a temporary table or as a permanent table, you are able to append or override data in an existing table, or you can push that information into a new table.

- Batch queries on the other hand are queued.

- The query is executed when there are, idle resources available in the BigQuery shared resource pool.

- If the query has not been executed within 24 hours, the job priority is changed to interactive. In this case, it'll run as soon as possible, and the materialization of query results can be used to lower costs and simplify complex queries.

How data is stored within BigQuery.

- BigQuery uses the capacitor column data format.

- Tables can be partitioned within BigQuery as with a relational database.

- Individual records exist as rows and each record is composed of a number of columns. Table

- schemas can be specified at the time of the creation of the table, or when you load data into the table.

- If we have a look here it's, as you would expect from a relational database, there are records and there are columns in this table and we can have multiple partitions of the table depicted here.

Capacitor storage system in Big Query.

- The capacitor storage system is extremely efficient

- It is a proprietary columnar data storage that supports semi-structured data. And by semi-structured data, we mean nested and repeated fields.

- Data is converted from the input format, which could be CSV / JSON to the capacitor format when it is loaded into Big Query.

- It stores data in its column the storage, which means it separates each record into column values and stores each record on a different storage volume Each column.

- In addition to its value, also stores two numbers, the values repetition level, and the definition level.

- This encoding allows the full or partial structure of a record to be reconstituted without reading the parent's columns.

- We can think of each column being separated and stored on a separate volume

- Remember each value is stored together with its repetition level and definition level.

- We can show this schematically by assuming row two, column five has the value New York with repetition level three and definition level five.

- How repetition level and definition level actually work.

- The definition and repetition level in coding is so efficient for semi-structured data that it has been used for other encodings such as the open-source Parquet encoding

De-normalization in Big Query.

- Big Query performance is optimized when data is denormalized appropriately.

- Denormalization refers to nested and repeated columns.

- Denormalization allows data to be stored in a very efficient manner, and it is also then retrieved in an efficient manner.

- If you want to use nested or repeated columns, you use the record data type.

- Let's look at this schematically.

- So here we have a table with three columns, the name, the ID, and the address column.

- Suppose we want to have the address column be a nested column. We would then assign it the record data type.

- And in that case, we can specify sub-columns, such as the name, the street, and the city.

- We can also have repeated values for each record.

- So in this example, for each name or person, we have multiple addresses stored And of course the repeated columns can also be nested.

Let's look at this example.

- So here we have a schema, we have information on a person. First name, last name, date of birth.

- And now we also have the addresses column, which is nested and repeated.

- So it's nested because you can see the sub-values of status, address, city, state, zip code, etcetera.

- And it is repeated because we can have multiple addresses for each ID.

- So when we look at the JSON representation of actual information, we can see for a single ID one, we have the first address over here.

- And the second address here, when importing data into Big Query, we need to keep in mind that there are a number of supported formats.

Formats to Upload data:

- The first one is CSV.

- This is the default source format for loading data into Big Query.

- Next, we have JSON.

- It's important that each record is newline delimited.

- Next, we have Avro,

- Avro is an open-source data format where the schema is stored together with the data.

- Avro is the preferred format for loading compressed data into Big Query.

- Then there is parquet,

- It is based on the dremmel storage approach.

- It's a good choice because Parquet's encoding is efficient and it typically results in smaller files.

- Then there is ORC or optimized row columnar format.

- ORC is an efficient way to store hive data.

- Then we have cloud Datastore exports and Firestore exports.

- All of the data from these formats are converted to the column, the format in BigQuery.

Big Query views.

- A view is a virtual table that is defined by an SQL query.

- If we have a dataset. All views exist within a dataset, and we have an SQL query, which defines the view, that SQL query will access data from a number of tables.

- We can create the view within the dataset

- When you have created a view, you can access that view in the same way that you query a table.

- So other SQL queries can then query that view.

- A view is unmaterialized.

- That means that the underlying query is executed each time the view is accessed. That will have billing implications.

Benefits of views

- They allow us to control access to data.

- When a user queries a view, they will only have access to the tables and fields that are part of the definition of that view.

- Views can be used to reduce overall query complexity.

- This is done by breaking a complex query into a number of simpler queries.

- Views can be used to construct logical tables.

- Logical tables can be used to organize similar information from different physical tables in Big Query.

- And finally, have the ability to create authorized views, authorized views allow users to have access to different subsets of rows from the view.

Big Query views limitations

- You cannot export data from a view because they are unmaterialized.

- You cannot use the JSON API to retrieve data from a view, and you cannot combine standard and legacy SQL.

- If you have defined a view using legacy SQL, you cannot query that view using standard SQL.

- The definition of a view cannot use user-defined functions.

- There can be no wild card table references in view definitions, and you're limited to 1000 authorized views per data set, a federated or external data source is a data source that you can query directly.

Data From External Sources

- Even though the data is not held within Big Query, Big Query offers support for querying data from Cloud Big table, cloud storage, and Google drive.

- There are two typical use cases.

- First is where we want to load and clean data in one pass.

- In this case, we changed the data from the external source before loading it into a Big Query table.

- The second used case is where you have small frequently changing data joined with other tables.

- An example of this is when you may be querying geographical data that changes from time to time.

- It is easier to change the locations within a document in Google drive than it is to change the data within the Big Query table.

Limitations that apply to external data sources.

- There is no guarantee of consistency.

- There is a lower query performance in general,

- You cannot use the Table Data List, API method.

- You cannot run export jobs on external data.

- You cannot reference external data in a wildcard table query.

- You cannot use Parquet or ORC formats for external data.

- The query results for external data sources are not caged.

- You're limited to four concurrent queries where external data is involved.

Data sources that are useful in Big Query.

- There are public data sets.

- These are accessible to everyone within Big Query.

- Then there are shared data sets.

- These are data sets that have been shared with you.

- And finally, you can also view Stack driver log information within Big Query.

- Big Query also allows you to bulk extract and analyze data from a number of additional data sources.

- Pulling bulk data into Big Query is easily facilitated using the data transfer service.

- The data transfer service has a number of connectors to Google services, to GCP services like cloud storage to AWS services like S3 and Redshift.

- They also connect us to other third-party systems such as Facebook, LinkedIn, and there are many others.

- These bulk extractions can be one-off events or they can be scheduled to run repeatedly.

- The data transfer service allows for the historical restatements of data and has an uptime and delivery SLA.

- You can access the Big Query data transfer service using the cloud console, the Big Query command line tool, or the Big Query data transfer service API.

No comments:

Post a Comment