Artificial Neural Networks and Scalars, Vectors, Matrices, and Tensors

Scalar:

- A Scalar is simply a number.

- It has 0 dimensions.

- On a slightly more technical level, a scalar is a quantity that is invariant under transformations of the coordinate system.

Vector:

- A vector is a list of numbers stacked in a column.

- If you remember from school maths or physics, vectors are quantities that have both magnitude and direction.

- The example shown here is a 2 x 1 vector: the number of rows is given first, followed by the number of columns.

- Vectors have the general form M x 1, which means you can have many rows but only a single column.

Matrices:

- A matrix, on the other hand, is a collection of numbers that are arranged into rows and columns.

- Matrices are able to interact with or change vectors.

- In this example, we have a 2 x 2 matrix with 2 rows and 2 columns.

- The general structure of a matrix is M x N, meaning you can have many rows and many columns.

Tensor:

- A tensor is similar to a matrix, but it allows for an arbitrary number of dimensions.

- You can think of a tensor as a multi-dimensional matrix.

- In the screenshot above, we have a 2 x 2 x 4 tensor.

- You can think of this as four 2 x 2 matrices stacked on top of each other.

- TensorFlow is called TensorFlow because tensors represent the flow of information between the layers of a neural network.

- The input to a neural network is represented as a tensor.

- We could think of tensors as having the general form M x N x P, but as mentioned, we can have an arbitrary number of dimensions.

A vector is a special case of a matrix. Vectors and matrices can be considered special cases of a tensor. Leaving complexities aside, a scalar can be considered a special case of a vector.
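As a rough illustration of the four objects described above, here is a minimal NumPy sketch. The variable names and the numeric values are made up for the example. Note that NumPy counts dimensions by the number of axes, so a plain list of numbers has 1 dimension, while the M x 1 column form has 2 axes.

```python
import numpy as np

# Scalar: a single number with 0 dimensions.
scalar = np.array(5.0)

# Vector: a list of numbers. NumPy stores it with one axis, shape (2,).
vector = np.array([1.0, 2.0])

# The same vector written explicitly as a 2 x 1 column (2 rows, 1 column).
column = vector.reshape(2, 1)

# Matrix: numbers arranged into 2 rows and 2 columns.
matrix = np.array([[1.0, 2.0],
                   [3.0, 4.0]])

# Tensor: four 2 x 2 matrices stacked along a new last axis -> 2 x 2 x 4.
tensor = np.stack([matrix, matrix, matrix, matrix], axis=-1)

for name, value in [("scalar", scalar), ("vector", vector),
                    ("column", column), ("matrix", matrix),
                    ("tensor", tensor)]:
    print(name, "ndim =", value.ndim, "shape =", value.shape)

# A matrix can change a vector: multiplying a 2 x 2 matrix by a
# 2 x 1 column gives a new 2 x 1 column.
transformed = matrix @ column
print(transformed)
```

The `ndim` values go 0, 1, 2, 2, 3, which mirrors the idea that scalars, vectors, and matrices are all special cases of a tensor.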

Deep artificial neural networks:

- Deep artificial neural networks often perform better than other types of models, although they can be harder to build and train.

- Neural networks are composed of a number of different layers.

- Input layer: This is the layer that allows us to feed data into the model.

- Output layer: This represents the way we want the neural network to provide answers.

- In a classification problem, we'll have a neuron for each of the available classes.

- Hidden layers: In between the input and output layers, there are a number of so-called hidden layers.

- The number of hidden layers will vary based on the type of problem you are trying to solve.

- The number of hidden layers is an example of a model hyperparameter.

- It is customary to refer to the input layer as layer 0, and then have subsequent layers, sequentially numbered until we reach the output layer.

- In this case, our output layer is layer 6. Since we started at 0, we actually have 7 layers.

- Each layer will also have a specified number of neurons or nodes.

- In this example, our input layer has 5 nodes and our first layer has 4 nodes.

- We need to represent our input data in such a way that it can be fed into the artificial neural network.

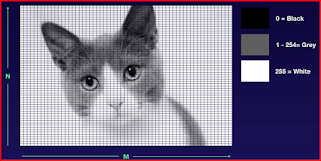

Example:

- A 2-dimensional grayscale image can be represented as an N x M matrix.

- The matrix dimensions would represent the number of pixels in the horizontal and vertical directions.

- Each pixel would be represented as a value within the matrix.

- With a byte image, 0 represents black, 255 represents white, and values between 0 and 255 represent gray pixels.

- Remember normalization here: if we have values between 0 and 255, it makes sense to divide all values by 255 to get elements in the inclusive range from 0 to 1.
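The normalization step above can be sketched with NumPy as follows. The tiny 2 x 3 "image" and its pixel values are invented for illustration; a real image would normally be loaded from a file.

```python
import numpy as np

# A made-up 2 x 3 grayscale byte image: 0 is black, 255 is white.
image = np.array([[0, 128, 255],
                  [64, 192, 32]], dtype=np.uint8)

# Convert to floating point first, then divide by 255 so that every
# pixel value lands in the inclusive range from 0 to 1.
normalized = image.astype(np.float32) / 255.0

print(normalized.shape)                    # same shape as the input image
print(normalized.min(), normalized.max())  # smallest and largest pixel values
```

Converting to a floating-point type before dividing makes the intent explicit and keeps the result from being stored back into an integer type.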

Fully-connected layer:

- We can have the scenario where every neuron of one layer is connected to every neuron in the following layer.

- Fully connected layers are also known as dense layers.
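To make "every neuron is connected to every neuron" concrete, here is a minimal NumPy sketch of a single dense layer with 5 inputs and 4 neurons. The random weights, the zero biases, and the ReLU activation are assumptions chosen for the example, not details taken from the notes.

```python
import numpy as np

rng = np.random.default_rng(0)

n_inputs, n_neurons = 5, 4

# One weight per (neuron, input) pair: every input feeds every neuron.
weights = rng.normal(size=(n_neurons, n_inputs))
biases = np.zeros(n_neurons)

# A single made-up input sample with 5 features.
x = rng.normal(size=n_inputs)

# Forward pass: weighted sum per neuron, then ReLU (assumed activation).
outputs = np.maximum(0.0, weights @ x + biases)

print(weights.size)   # number of connections between the two layers
print(outputs.shape)  # one output value per neuron
```

The weight matrix has 5 x 4 = 20 entries, one for each connection between the two layers.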

Partially connected layers:

- There are other types of layers where not every neuron in one layer is connected to every neuron in the adjacent layer.

- These types of layers can play many different roles within an artificial neural network.

Python program that builds a neural network to perform a regression:

- We import the TensorFlow library along with the NumPy library. NumPy is a Python library for mathematical operations that supports multi-dimensional arrays (matrices or tensors).

- We then build the neural network. We have a dense, or fully connected, layer. Our input is a tensor with only one dimension.

- The output layer has a single neuron, as specified by the number of units.

- We compile the model, which essentially prepares it for training.

- We specify what algorithm will be used to optimize the model on the training data.

- SGD stands for Stochastic Gradient Descent, which is a variant of gradient descent.

- We also specify the loss, that is, how the error is to be measured. This is the function that needs to be minimized.

- We set up our training data. In this simple example, our data represents the function f(x) = 2x - 1.

- Each training sample has a single input feature, held in the train x array. Each sample also has a single associated label.

- We use model.fit to actually train the model. We specify the number of epochs, that is, the number of times our training data will be used to train the model.

- Finally, we use our trained model to make a prediction. We use the predict method to determine what the output value should be for a given input.
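The steps above could look roughly like the following Keras sketch. The program itself is not shown in these notes, so this is a reconstruction under assumptions: the variable names `train_x` and `train_y`, the six sample points, the mean squared error loss, the 500 epochs, and the test input of 10 are all illustrative choices.

```python
import numpy as np
import tensorflow as tf

# Build the network: a one-dimensional input feeding a single dense
# (fully connected) layer with one neuron.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(1,)),
    tf.keras.layers.Dense(units=1),
])

# Compile: choose the optimizer (stochastic gradient descent) and the
# loss to minimize (mean squared error is an assumption here).
model.compile(optimizer="sgd", loss="mean_squared_error")

# Training data generated from f(x) = 2x - 1 (sample points are assumed).
train_x = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0], dtype=np.float32).reshape(-1, 1)
train_y = 2.0 * train_x - 1.0

# Train: each epoch is one pass over the training data.
model.fit(train_x, train_y, epochs=500, verbose=0)

# Predict for a new input; f(10) = 19, so the output should be close to 19.
prediction = model.predict(np.array([[10.0]], dtype=np.float32), verbose=0)
print(prediction[0][0])
```

The prediction will usually be close to 19 rather than exactly 19, because the model only approximates the function from six samples and a finite number of training steps.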
