Google's Cloud Pub/Sub.

Pub/Sub handles messaging and event ingestion at a global level.

Why would we use messaging middleware in the first place?

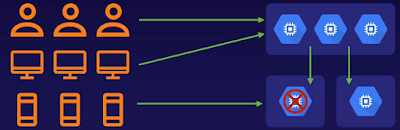

Let's imagine a scenario where we don't use any sort of messaging middleware layer. Say we have some users we want to serve with our app. The communication between the two can be direct. The user talks directly to the app, maybe through a web browser, but it's a direct link from one to the other. Maybe in addition to our user, there's another app that needs to talk to our initial app. Maybe it's running in another cloud or in a data center, but again, it talks directly to our app. You might already be thinking of some problems with this design. None of these direct connections have much resilience built into them.

Now let's say our app gets popular.

We're getting more users and more third-party apps connecting to us, but that's okay. We know how to auto-scale in GCP. So we add more compute to handle the load. Now let's say we have a backend app in our stack and maybe there are some mobile devices that need to send data directly to this part of the stack, but it's dependent on some data from the frontend. It can't act until it's got both pieces of information and maybe there's a further component to our stack that relies on the outcome of the second component, but it can only talk to the frontend because of its geolocation. This is all starting to look a bit tenuous. What happens if this part breaks? Well, there goes our mobile data and now the frontend can't finish what it's supposed to be processing, so it starts blocking. Eventually, this causes our original application to start crashing. We're left with a system that can barely handle its frontend requests and the response to those requests is probably just, please try again later.

So where did it all go wrong?

- Well, the fundamental problem with this design is dependency.

- Every component is dependent on some other component in order for it to function.

- And often, it's relying on things to happen in a certain order that can't be guaranteed.

- The second that one component has a problem, well, the whole house of cards comes tumbling down.

Solution

- The solution to this design problem is to introduce messaging middleware, or a message bus.

- This is simply a layer that we introduce that handles all of the messages that used to be passed directly between each component.

- There are different models of message bus, but Google Cloud Pub/Sub uses the publish/subscribe model.

- In this model, our message bus can be split into different groups of messages.

In Pub/Sub, we call these Topics.

- Anything can publish a message to a topic or choose to receive a message from a topic.

- So now information from our users and other apps is published to a topic which can be consumed by our application.

- Our user client can also be updated to simply pull information back from a topic when it needs to.

- Mobile data can be sent to another topic to be consumed by our backend application.

- We can have a separate topic that we choose to act on only when there are messages there ready for us to receive.

- In this way, we've loosely coupled our services with a message bus and in doing so, we've introduced resilience in the event of a single component failure.

- Because in our case, Pub/Sub is a global fully-managed service, messages can simply queue in the topic, ready to be consumed again when a component is restored.

- In this way, you can think of Pub/Sub as a kind of shock absorber for your systems.

- Pub/Sub works equally well for exchanging messages and data around a distributed system, as well as simple triggers and events, acting on certain actions and triggering other actions to occur.
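
The shock-absorber idea above can be sketched with a toy, in-memory bus. This is not the Pub/Sub API: `ToyBus`, the topic name `mobile-data`, and the subscription name `backend` are all made up for illustration, and the sketch only shows how a publisher can keep publishing while a consumer is down.

```python
from collections import deque


class ToyBus:
    """In-memory stand-in for a message bus (hypothetical, not the Pub/Sub API)."""

    def __init__(self):
        # topic name -> {subscription name -> queue of pending messages}
        self._topics = {}

    def create_topic(self, topic):
        self._topics.setdefault(topic, {})

    def subscribe(self, topic, subscription):
        self._topics[topic].setdefault(subscription, deque())

    def publish(self, topic, message):
        # Every subscription on the topic gets its own copy of the message.
        for queue in self._topics[topic].values():
            queue.append(message)

    def pull(self, topic, subscription):
        # Return the oldest pending message, or None if nothing is waiting.
        queue = self._topics[topic][subscription]
        return queue.popleft() if queue else None


bus = ToyBus()
bus.create_topic("mobile-data")
bus.subscribe("mobile-data", "backend")

# The backend is "down": publishers keep publishing without noticing.
for reading in ("reading-1", "reading-2", "reading-3"):
    bus.publish("mobile-data", reading)

# The backend comes back and drains what queued up while it was away.
recovered = []
while (message := bus.pull("mobile-data", "backend")) is not None:
    recovered.append(message)

print(recovered)
```

The publisher never holds a reference to the backend, only to the topic name, which is the loose coupling described above.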

Cloud Pub/Sub

- Pub/Sub is Google's global messaging and event ingestion service.

- Using the publisher/subscriber model, it can handle messages or events consistently in multiple regions as a serverless, NoOps, fully-managed service.

- There is nothing to provision. You simply consume the Pub/Sub API.

- Like many other GCP products, it is a public-facing version of a tool Google has been using and developing internally for years.

- Google's internal Pub/Sub handles messages and events for ads, Gmail, and search, processing up to 500 million messages per second, totaling over one terabyte per second of data.

Cloud Pub/Sub - features:

- Includes multiple publisher and subscriber patterns.

- There are different relationships you can set up between publishers and subscribers, such as one to many, many to one, and many to many.

- Pub/Sub guarantees at-least-once delivery of every message.

- In fact, due to its distributed and globally replicated nature, there's a tiny chance you might get a message more than once, although this is rare.

- You can process messages in real time or in batches, depending on your design pattern, with exponential backoff for publishing and push subscriptions.

- And if you need more advanced time windowing or exactly-once processing, you can integrate Pub/Sub with Cloud Dataflow.
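
Because delivery is at-least-once, a subscriber should tolerate seeing the same message twice. One common way to do that, sketched below, is to remember which message IDs have already been processed. The helper `make_idempotent`, the message IDs, and the payloads are hypothetical, and the sketch assumes a redelivered message arrives with the same ID as the first delivery.

```python
def make_idempotent(handler):
    """Wrap a handler so each message ID is processed at most once."""
    seen_ids = set()  # assumption: a real system would use a shared, durable store

    def wrapper(message_id, data):
        if message_id in seen_ids:
            return False  # duplicate delivery: skip the work
        handler(data)
        seen_ids.add(message_id)
        return True

    return wrapper


processed = []
handle = make_idempotent(processed.append)

# Hypothetical delivery stream in which message "m-2" arrives twice.
deliveries = [
    ("m-1", "order created"),
    ("m-2", "order paid"),
    ("m-2", "order paid"),
]
results = [handle(message_id, data) for message_id, data in deliveries]

print(processed)
print(results)
```

An in-memory set is only enough for a single process. The point is the pattern, not the storage choice.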

Publishing messages to Pub/Sub

- You just create a message containing your data. The data can be any bytes, commonly a JSON payload. When you call the REST API directly, it is base64 encoded inside the JSON request body.

- The total size of the payload needs to be 10 MB or less.

- Then you send the payload as a request to the Pub/Sub API specifying the topic to which the message should be published.
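
As a rough sketch of those steps, the function below builds the URL and JSON body for a REST publish call without sending anything. The project name, topic name, and payload are placeholders, the helper `build_publish_request` is hypothetical, and treating 10 MB as 10,000,000 bytes is an assumption. A real request would also need an OAuth access token, which is left out here.

```python
import base64
import json

# Assumption: the 10 MB limit is treated as decimal megabytes.
MAX_MESSAGE_BYTES = 10 * 1000 * 1000


def build_publish_request(project, topic, payload):
    """Return (url, body) for a REST publish call; nothing is sent."""
    raw = json.dumps(payload).encode("utf-8")
    if len(raw) > MAX_MESSAGE_BYTES:
        raise ValueError("payload exceeds the 10 MB limit")
    url = (
        "https://pubsub.googleapis.com/v1/"
        f"projects/{project}/topics/{topic}:publish"
    )
    # The message data travels base64 encoded inside the JSON request body.
    encoded = base64.b64encode(raw).decode("ascii")
    body = json.dumps({"messages": [{"data": encoded}]})
    return url, body


# Placeholder project, topic, and payload.
sample_payload = {"device": "phone-1", "temp": 21.5}
url, body = build_publish_request("my-project", "mobile-data", sample_payload)
print(url)
print(body)
```

In practice most code would use one of the official client libraries, which handle the encoding and authentication for you.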

Receiving messages from Pub/Sub

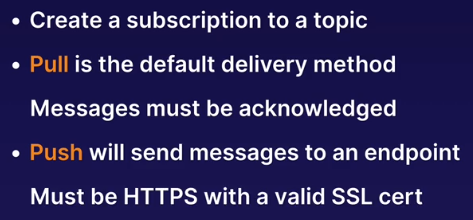

- You simply create a subscription to a topic.

- Subscriptions are always associated with a single topic.

- There are two delivery methods for subscriptions: pull and push.

- Pull is the default method.

- Once you have created a pull subscription, you can make ad hoc pull requests to the Pub/Sub API, specifying your subscription, to receive the messages associated with that subscription.

- When you receive a message, note that you have to acknowledge that you've received it.

- If you don't acknowledge it before the acknowledgement deadline expires, Pub/Sub treats the message as undelivered and sends it again, so the same message keeps coming back to your subscription until you acknowledge it.

- Alternatively, you can configure a push subscription, which will automatically send new messages to an endpoint that you define.

- The endpoint must use the HTTPS protocol with a valid SSL certificate. And of course, something has to sit on the end of that endpoint that is capable of receiving and processing a message.
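
To give a feel for what "something on the end of that endpoint" has to do, here is a minimal sketch of the decoding step for a push delivery. It assumes the push request body is a JSON envelope with a `message` object whose `data` field is base64 encoded, and that a success status code acts as the acknowledgement. The function name `handle_push` and the sample values are made up, and the web server around it is omitted.

```python
import base64
import json


def handle_push(request_body):
    """Decode one push delivery and return (http_status, decoded_payload)."""
    try:
        envelope = json.loads(request_body)
        message = envelope["message"]
        payload = base64.b64decode(message.get("data", "")).decode("utf-8")
    except (ValueError, KeyError, TypeError, AttributeError):
        # Malformed request: a non-success status leaves it unacknowledged.
        return 400, None
    # ... application-specific processing of payload would go here ...
    return 204, payload


# Hypothetical push request body, shaped like the assumed envelope.
sample = json.dumps(
    {
        "message": {
            "data": base64.b64encode(b"hello").decode("ascii"),
            "messageId": "1",
        },
        "subscription": "projects/my-project/subscriptions/my-sub",
    }
)
status, payload = handle_push(sample)
print(status, payload)
```

Returning the success status only after processing has finished means a crash mid-way results in a retry rather than a lost message.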
