Use Apache Spark in Azure Databricks

One of the benefits of Spark is its support for a wide range of programming languages, including Java, Scala, Python, and SQL. This makes Spark a very flexible solution for data processing workloads such as data cleansing and manipulation, statistical analysis and machine learning, and data analytics and visualization. Azure Databricks is built on Apache Spark and offers a highly scalable solution for data engineering and analysis tasks that involve working with data in files.

Step 1. Set up an Azure Databricks workspace and open a notebook

Detailed steps are available here: https://saurabhsinhainblogs.blogspot.com/2024/01/azure-databricks-lab-how-to-start-with.html

- Connect to the Azure portal

- Set up Azure Databricks

- Set up a cluster for Azure Databricks

- Open a notebook

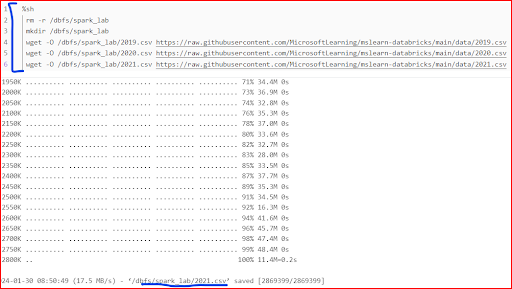

Step 2. Prepare data to consume

Shell commands can also be used to download data files from GitHub into the Databricks File System (DBFS).

Code:

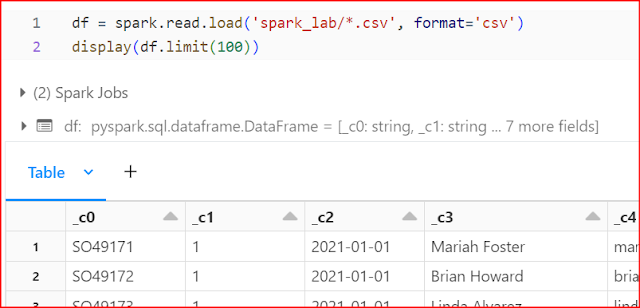

Step 3. Load the data frame and view the data

Observe the following problems with the loaded data:

- The data doesn't include column headers.

- Information about the data types is missing.

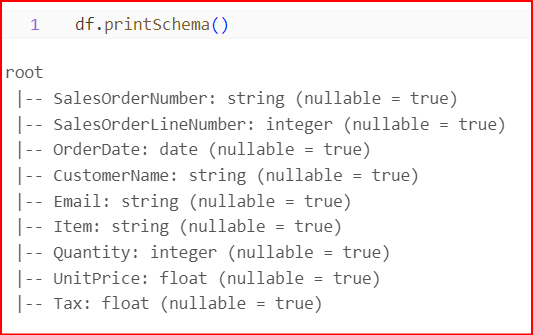

Step 3 (continued). Define a schema for the data frame

Observe that, this time, both of these problems are solved:

- The data frame includes column headers

- Information about the data types is also available

Code:

Step 4. Filter a data frame

- Filter the columns of the sales orders data frame to include only the customer name and email address.

- When you perform an operation on a data frame, the result is a new data frame (in this case, a new customer data frame is created by selecting a specific subset of columns from the df data frame)

- The dataframe['Field1', 'Field2', ...] syntax is a shorthand way of selecting a subset of columns. You can also use the select method, so the first line of the filtering code could be written as customers = df.select("CustomerName", "Email").

- Count the total number of order records

- Count the number of distinct customers

- Display the distinct customers

- Dataframes provide functions such as count and distinct that can be used to summarize and filter the data they contain.

Code:

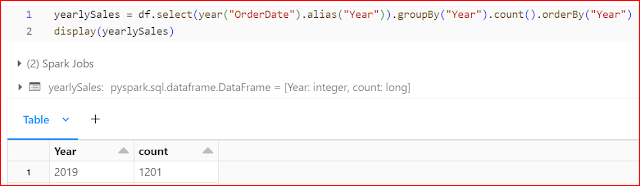

Step 5. Aggregate and group data in a data frame

Code:

Next, show the number of sales orders per year. Note that the select method includes the SQL year function to extract the year component of the OrderDate field, and the alias method assigns a column name (Year) to the extracted value. The data is then grouped by the derived Year column, the number of rows in each group is counted, and finally the orderBy method sorts the resulting data frame.

Code:

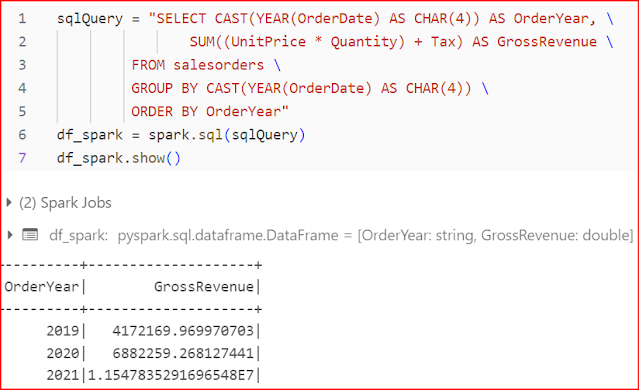

Step 6. Query data using Spark SQL

- The native methods of the data frame object you used previously enable you to query and analyze data quite effectively. However, many data analysts are more comfortable working with SQL syntax.

- Spark SQL is a SQL language API in Spark that you can use to run SQL statements, or even persist data in relational tables.

- The code for this step creates a relational view of the data in a data frame, and then uses the spark.sql method to embed Spark SQL syntax within your Python code, query the view, and return the results as a data frame.

Code:

- The `%sql` line at the beginning of the cell (called a magic command) indicates that the Spark SQL language runtime should be used to run the code in this cell instead of PySpark.

- The SQL code references the salesorder view that you created previously.

- The output from the SQL query is automatically displayed as the result under the cell.

Step 7. Visualize data with Spark

- Azure Databricks includes built-in support for visualizing data from a data frame or Spark SQL query.

- However, these built-in visualizations are not designed for comprehensive charting.

- You can use Python graphics libraries like Matplotlib and Seaborn to create charts from data in data frames.

To create a built-in chart, add a visualization to the query results and configure it as follows:

- Visualization type: Bar

- X Column: Item

- Y Column: Add a new column and select Quantity. Apply the Sum aggregation.

- Save the visualization and then re-run the code cell to view the resulting chart in the notebook.

Step 8. Use Matplotlib in Databricks

- Matplotlib cannot plot a Spark dataframe directly, so you need to convert the Spark dataframe returned by the Spark SQL query to a pandas dataframe first (for example, with the toPandas method).

- At the core of the matplotlib library is the pyplot module, which is the foundation for most plotting functionality.

Code:

A figure can contain multiple subplots, each on its own axes.

Code:

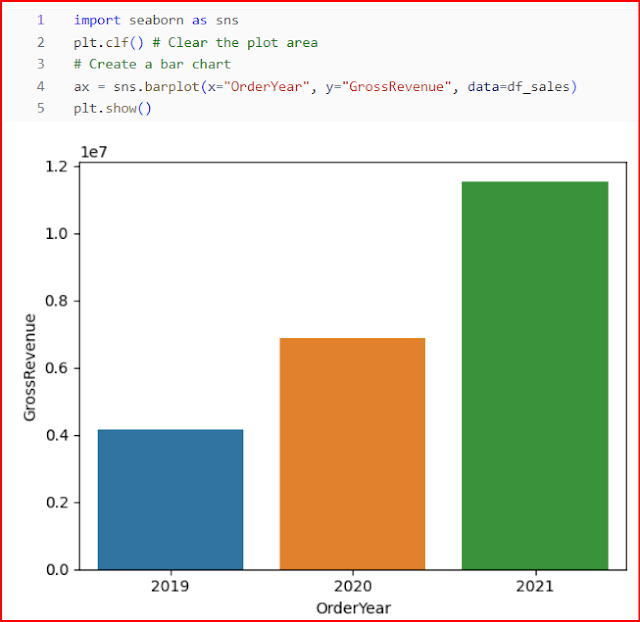

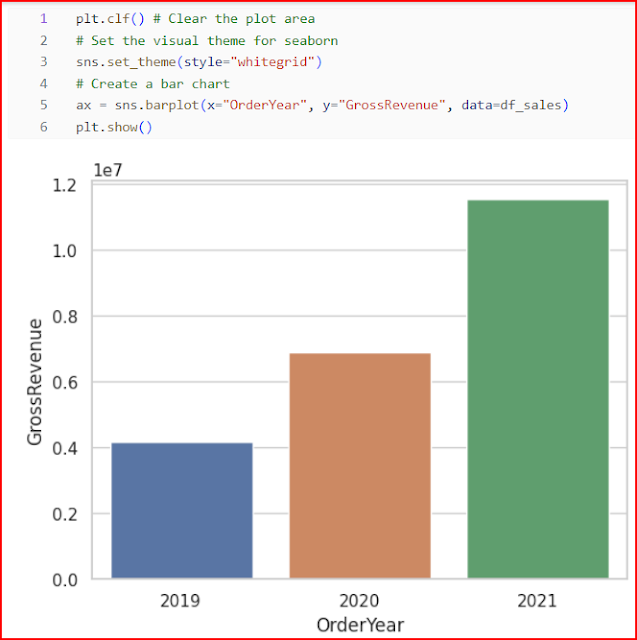
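A hedged sketch of one figure with two subplots created by `plt.subplots`. A small hypothetical pandas dataframe stands in for `df_sales = df_spark.toPandas()`, and the pie of each year's revenue share is an illustrative choice for the second axes.

```python
import matplotlib

matplotlib.use("Agg")  # plain-script backend; omit this line in a notebook
import pandas as pd
from matplotlib import pyplot as plt

# Hypothetical stand-in for df_sales = df_spark.toPandas()
df_sales = pd.DataFrame(
    {"OrderYear": ["2019", "2020", "2021"], "GrossRevenue": [216.0, 324.0, 150.0]}
)

# One figure with one row and two columns of subplots (axes).
fig, ax = plt.subplots(1, 2, figsize=(10, 4))

# Left axes: bar chart of revenue by year.
ax[0].bar(x=df_sales["OrderYear"], height=df_sales["GrossRevenue"], color="orange")
ax[0].set_title("Revenue by Year")

# Right axes: pie chart of each year's share of total revenue.
ax[1].pie(df_sales["GrossRevenue"], labels=df_sales["OrderYear"])
ax[1].set_title("Revenue Share by Year")

fig.suptitle("Sales Data")
plt.show()
```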

Step 9. Use the Seaborn Library

Use the seaborn library (which is built on matplotlib and abstracts some of its complexity) to create a chart:

Code:
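A minimal seaborn sketch of the same revenue-by-year bar chart, assuming a hypothetical pandas dataframe in place of the converted Spark result.

```python
import matplotlib

matplotlib.use("Agg")  # plain-script backend; omit this line in a notebook
import pandas as pd
import seaborn as sns
from matplotlib import pyplot as plt

# Hypothetical stand-in for df_sales = df_spark.toPandas()
df_sales = pd.DataFrame(
    {"OrderYear": ["2019", "2020", "2021"], "GrossRevenue": [216.0, 324.0, 150.0]}
)

plt.clf()  # start from a clean plot area
# Column names go in as strings; seaborn also uses them as axis labels.
ax = sns.barplot(x="OrderYear", y="GrossRevenue", data=df_sales)
plt.show()
```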

The seaborn library makes it simpler to create complex plots of statistical data, and it lets you control the visual theme for consistent data visualizations.

Code:
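A sketch of setting a theme with `sns.set_theme`, using the "whitegrid" style as an example choice and the same hypothetical data. The theme is global: it applies to every chart drawn afterwards in the session.

```python
import matplotlib

matplotlib.use("Agg")  # plain-script backend; omit this line in a notebook
import pandas as pd
import seaborn as sns
from matplotlib import pyplot as plt

# Hypothetical stand-in for df_sales = df_spark.toPandas()
df_sales = pd.DataFrame(
    {"OrderYear": ["2019", "2020", "2021"], "GrossRevenue": [216.0, 324.0, 150.0]}
)

plt.clf()
# White background with grid lines; changes matplotlib's global rcParams.
sns.set_theme(style="whitegrid")
ax = sns.barplot(x="OrderYear", y="GrossRevenue", data=df_sales)
plt.show()
```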

Like matplotlib, seaborn supports multiple chart types.

Code:

Final step. Clean up resources

- In the Azure Databricks portal, on the Compute page, select your cluster and select Terminate to shut it down.

- If you’ve finished exploring Azure Databricks, you can delete the resources you’ve created to avoid unnecessary Azure costs and free up capacity in your subscription.

![customers = df['CustomerName', 'Email'] print(customers.count()) print(customers.distinct().count()) display(customers.distinct()) Filter a dataframe](https://blogger.googleusercontent.com/img/a/AVvXsEg_dfgdeZgj3OFE503lu_foJCuLthz7Pu_-E6zN4khy6F7WIVIwhI7Sx9xmjzLmNA70Ia2ITkG6dMf1e_50HhZl_lSMkiUlDIbSpROpCG_-aDkvsyx1ETSOLTdM9eyT6wRiI5wWq_aNm2PILzDYNMIiMutOEpgZnC8Qokc6zs-rx3gtyQKFHVX3IUuLsH4=w640-h394-rw)

![customers = df.select("CustomerName", "Email").where(df['Item']=='Road-250 Red, 52') print(customers.count()) print(customers.distinct().count()) display(customers.distinct()) Selec and where in pyspark databricks](https://blogger.googleusercontent.com/img/a/AVvXsEi35IO2l82MD5Wckdw2OyTSk1AJdS3tycDtCbUfVV5ioZowWmutMznlbxdZLdYt6j5UCrv5OLdGIO4bL-aygqrtyo4sCKZh-pOUz2f-SjpxK8h0UnYfjrGhsjmrnbNMtHybQMIWffLcK7tT0Uy1P9_ysw14T0dFo723i0BKS_97LVt_XzQXjnvU6tVy2gc=w640-h308-rw)

![from matplotlib import pyplot as plt # matplotlib requires a Pandas dataframe, not a Spark one df_sales = df_spark.toPandas() # Create a bar plot of revenue by year plt.bar(x=df_sales['OrderYear'], height=df_sales['GrossRevenue']) # Display the plot plt.show() matplotlib databricks](https://blogger.googleusercontent.com/img/a/AVvXsEiwvrwY3fADKnQGA-FeasOXTFsjTGrpUFu05wCurZMUNqt6TN3HZ0cFE6Ffa3ynbJwPrsv9W0nlgK72besXVubmaLR6f5DPS200vd0AN9_Y94WVDyp49n4bslDuxB50vqoadfOV9eGTuT21y91M2tJfwT1010rsKQC9_fwZWyC7oCBMxpC8yrcfNDpnzfk=w573-h640-rw)

![plt.bar(x=df_sales['OrderYear'], height=df_sales['GrossRevenue'], color='orange') # Customize the chart plt.title('Revenue by Year') plt.xlabel('Year') plt.ylabel('Revenue') plt.grid(color='#95a5a6', linestyle='--', linewidth=2, axis='y', alpha=0.7) plt.xticks(rotation=45) Customize the subplot in Azure Synapses](https://blogger.googleusercontent.com/img/a/AVvXsEijrLuOyP-3_rmn8AocZGx32_DnPZRhupUqhdAoebpWl9mq9Hyo3osiUZHTqlc1yrLecmsdubdaG23DLHTL-5jpGsZSmR0Cyp0X8eBXd3YulbvmZLKYZ1t-KYbfot3ExfXbMRQZipsqTfcOqtF2Ps7VBCHYhHT36peowzkVauaNhNCsrelhlg5KbGy4Q8Q=w584-h640-rw)

![# Create a Figure fig = plt.figure(figsize=(8,3)) # Create a bar plot of revenue by year plt.bar(x=df_sales['OrderYear'], height=df_sales['GrossRevenue'], color='orange') # Customize the chart plt.title('Revenue by Year') plt.xlabel('Year') plt.ylabel('Revenue') plt.grid(color='#95a5a6', linestyle='--', linewidth=2, axis='y', alpha=0.7) plt.xticks(rotation=45) # Show the figure Implicit and explicit subplots in data bricks](https://blogger.googleusercontent.com/img/a/AVvXsEgzxNXfAwf4gLpfefviVIXbes7HkqKneCzG34A4c9JgXsJmGqF_C65zrLZ32W1S9znbjaxTZrCwBb1Hs_RsN8R7v8cwskblXgOnCZSX7tMmcrGHSpRgC6nQapQfc09M0nNUtCj2_Q17MmwFFbJKgaJclcfy0zOQ9poUDkBuaEAtYoxZFf9kITfxdH7eR7o=w640-h589-rw)

![# Clear the plot area plt.clf() # Create a figure for 2 subplots (1 row, 2 columns) fig, ax = plt.subplots(1, 2, figsize = (10,4)) # Create a bar plot of revenue by year on the first axis ax[0].bar(x=df_sales['OrderYear'], height=df_sales['GrossRevenue'], color='orange') ax[0].set_title('Revenue by Year') # Create a pie chart of yearly order counts on the second axis yearly_counts = df_sales['OrderYear'].value_counts() ax[1].pie(yearly_counts) ax[1].set_title('Orders per Year') ax[1].legend(yearly_counts.keys().tolist()) # Add a title to the Figure fig.suptitle('Sales Data') # Show the figure multiple subplots Azure databricks](https://blogger.googleusercontent.com/img/a/AVvXsEgIpsHzUiDxfYU_h3WzdDVkMDQimvy9mTZcWWGRrtPnMXzlo_VKvh8OgcShSSewGBQTrJOvFKpGe73Y8ZVBhnk7RdYhm7bqPb9NeGqq874Z1xHZ2a2XljIhBR-oM37zSMjo1EpNYtGsUOf64qn4JkSdGXkcVlcmxRqlWgwNunNq3KhNX9NGThygtPXVjWw=w640-h600-rw)
