Ingest data into the Lakehouse using Fabric

In this exercise, you ingest additional dimension and fact tables from the Wide World Importers (WWI) sample data into the lakehouse.

Microsoft Fabric Labs

- Azure Microsoft Fabric - Lab 1 for Beginners

- Azure Microsoft Fabric - Auto Power BI Reports - Lab 2

- Microsoft Fabric - Ingest data into the Lakehouse - Lab 3

- Microsoft Fabric - Lakehouse and Data Transformation - Lab 4

- Microsoft Fabric and Power BI Reports - Lab 5

- Microsoft Fabric - Resource Cleanup - Lab 6

Task 1: Ingest data

In this task, you use the Copy data activity of the Data Factory pipeline to ingest sample data from an Azure storage account to the Files section of the lakehouse you created earlier.

- Click Fabric Lakehouse Tutorial-XX in the left-side navigation pane.

- On the Fabric Lakehouse Tutorial-XX workspace page, click the drop-down arrow on the +New button, then select Data pipeline.

- In the New pipeline dialog box, specify the name as IngestDataFromSourceToLakehouse and select Create. A new data factory pipeline is created and opened.

- In the newly created pipeline, IngestDataFromSourceToLakehouse, select Add pipeline activity, and then select Copy data.

- This action adds a Copy data activity to the pipeline canvas.

- Select the newly added Copy data activity on the canvas.

- The activity properties appear in a pane below the canvas.

- Select Expand (the upward arrow) in the Properties bar, as shown in the screenshot below.

- Under the General tab in the properties pane, specify the name for the Copy data activity as Data Copy to Lakehouse.

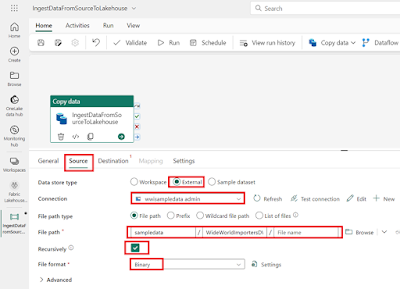

- Under the Source tab of the selected Copy data activity, select External as the Data store type, and then select + New to create a new connection to the data source.

- For this task, all the sample data is available in a public container in Azure Blob Storage. You connect to this container to copy data from it.

- In the New connection wizard, select Azure Blob Storage and then select Continue.

| Property | Value |
| --- | --- |
| Account name or URI | https://azuresynapsestorage.blob.core.windows.net/sampledata |
| Connection | Create new connection |
| Connection name | wwisampledata |
| Authentication kind | Anonymous |
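Because the container is public and the authentication kind is Anonymous, its contents can in principle be inspected without credentials before the pipeline is built. The following is a minimal sketch, assuming the container permits anonymous listing through the Azure Blob Storage List Blobs REST operation. The helper names (`build_list_url`, `parse_blob_names`, `list_blobs`) are illustrative and not part of the lab.

```python
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET

# Container URL taken from the connection settings above.
CONTAINER_URL = "https://azuresynapsestorage.blob.core.windows.net/sampledata"


def build_list_url(container_url, prefix, max_results=20):
    """Build an unauthenticated List Blobs request URL for a public container."""
    query = urllib.parse.urlencode({
        "restype": "container",
        "comp": "list",
        "prefix": prefix,
        "maxresults": max_results,
    })
    return f"{container_url}?{query}"


def parse_blob_names(xml_payload):
    """Extract blob names from a List Blobs XML response (str or bytes)."""
    root = ET.fromstring(xml_payload)
    return [node.text for node in root.findall("./Blobs/Blob/Name")]


def list_blobs(container_url, prefix, max_results=20):
    """Fetch one page of blob names under the given prefix (needs network access)."""
    url = build_list_url(container_url, prefix, max_results)
    with urllib.request.urlopen(url) as response:
        return parse_blob_names(response.read())
```

Calling `list_blobs(CONTAINER_URL, "WideWorldImportersDW/parquet/")` should return the first page of blob names under the directory that the lab copies, provided anonymous listing is allowed.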

- Back on the Source tab, specify the following properties:

| Property | Value |
| --- | --- |
| Data store type | External |
| Connection | wwisampledata |
| File path type | File path |
| File path | Container name (first text box): sampledata |
| File path | Directory name (second text box): WideWorldImportersDW/parquet |
| Recursively | Checked |
| File Format | Binary |

- Under the Destination tab of the selected Copy data activity, specify the following properties:

| Property | Value |
| --- | --- |
| Data store type | Workspace |
| Workspace data store type | Lakehouse |
| Lakehouse | wwilakehouse |
| Root Folder | Files |
| File path | Directory name (first text box): wwi-raw-data |
| File Format | Binary |
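With these source and destination settings, each file is copied as-is (Binary) and the folder structure below the source directory is expected to reappear under wwi-raw-data in the lakehouse. The sketch below illustrates that mapping, assuming the copy preserves the relative hierarchy. The function name and the example file name `part-0.parquet` are hypothetical.

```python
from pathlib import PurePosixPath

# Values taken from the source and destination settings of the Copy data activity.
SOURCE_DIR = "WideWorldImportersDW/parquet"
DEST_ROOT = "Files"
DEST_DIR = "wwi-raw-data"


def destination_path(blob_name, source_dir=SOURCE_DIR,
                     dest_root=DEST_ROOT, dest_dir=DEST_DIR):
    """Map a source blob name to its expected path in the lakehouse.

    Assumes the copy keeps the folder hierarchy relative to source_dir.
    Raises ValueError if blob_name is not located under source_dir.
    """
    relative = PurePosixPath(blob_name).relative_to(source_dir)
    return str(PurePosixPath(dest_root) / dest_dir / relative)


# Hypothetical file name, used only to illustrate the mapping.
example = destination_path(
    "WideWorldImportersDW/parquet/full/fact_sale_1y_full/part-0.parquet"
)
print(example)
```

Under that assumption, the fact_sale_1y_full data should land in Files/wwi-raw-data/full/fact_sale_1y_full, which is the folder the validation step at the end of this task inspects.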

- You have finished configuring the copy data activity. Select the Save button on the top ribbon (under Home) to save your changes.

- You will see a notification stating Saving is completed.

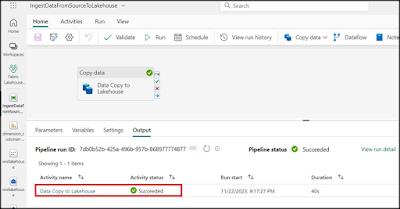

- On the IngestDataFromSourceToLakehouse page, select Run to execute your pipeline and its activity.

- This action triggers the data copy from the underlying data source to the specified lakehouse and might take up to a minute to complete.

- You can monitor the execution of the pipeline and its activity under the Output tab, which appears when you click anywhere on the canvas.

- Under the Output tab, hover over the Data Copy to Lakehouse row and select Data Copy to Lakehouse to view the details of the data transfer. Once the Status shows Succeeded, click the Close button.

- Now, click Fabric Lakehouse Tutorial-XX in the left-side navigation pane and select your new lakehouse (wwilakehouse) to launch the Lakehouse explorer.

- In the Lakehouse explorer view, validate that a new folder named wwi-raw-data has been created. Expand wwi-raw-data, select the full folder, and then select fact_sale_1y_full to confirm that the data files have been copied there.
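The same validation can be approximated from a notebook instead of the Lakehouse explorer. The sketch below counts the files in each subfolder of a directory tree. It assumes the lakehouse is attached to the notebook as the default lakehouse and that its Files section is mounted at `/lakehouse/default/Files`. The function name is illustrative.

```python
from collections import Counter
from pathlib import Path


def count_files_by_folder(root):
    """Return a dict mapping each subfolder (relative to root) to its file count."""
    root = Path(root)
    counts = Counter()
    for path in root.rglob("*"):
        if path.is_file():
            # Key by the file's parent folder, relative to the root, with forward slashes.
            counts[path.parent.relative_to(root).as_posix()] += 1
    return dict(counts)
```

In a notebook, `count_files_by_folder("/lakehouse/default/Files/wwi-raw-data")` should then report a non-zero count for `full/fact_sale_1y_full` if the pipeline run succeeded. The mount path is an assumption about the notebook environment and may need adjusting.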

